Microsoft Readies Era of NPU Devices with Hybrid Loop, Project Volterra

Devices with neural processors will enable processing to shift between device and cloud.

May 24, 2022

MICROSOFT BUILD: Microsoft is creating deeper integration between Windows and its Azure cloud with the development of Hybrid Loop and Project Volterra. Revealed Tuesday at the Microsoft Build developer conference, the new technologies will tap future client devices that have neural processing units (NPUs).

In the future, Microsoft anticipates most client devices will have NPUs, low-powered silicon that can perform AI inferencing and optimization. Microsoft has licensed Qualcomm’s new Neural Processing SDK for Windows, announced by Qualcomm on Tuesday.

Hybrid Loop

NPUs will effectively help balance workloads across a Windows device's CPU and GPU and Azure compute services. Offloading AI inferencing this way would free up the device's CPU and GPU for more compute-intensive processing.

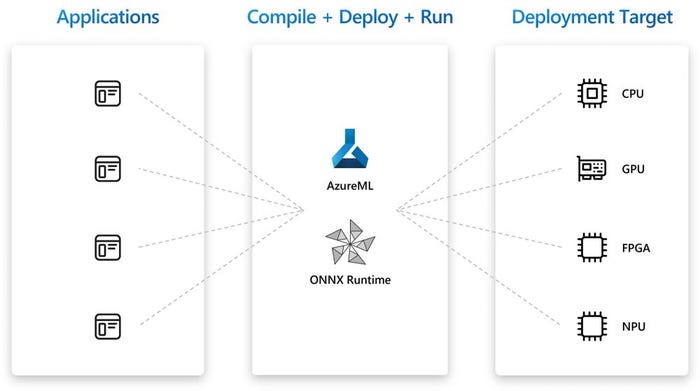

Hybrid Loop is a cross-platform development pattern for building AI capabilities that tap edge and cloud resources, according to Microsoft. Developers can use it to run AI inferencing on local clients or on Azure compute services. Microsoft said it will enable this capability with the release of an Azure Execution Provider in its ONNX Runtime.
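Microsoft did not publish Hybrid Loop code at Build, so the following is only a minimal sketch of the general pattern, assuming an app that hides where inference runs behind a single call. The names `HybridSession`, `tiny_model` and `fake_cloud_call` are hypothetical; nothing here uses the ONNX Runtime Azure Execution Provider or any real ONNX Runtime API, and the cloud call is a stub where a real app would send a network request.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical signature shared by both back ends: inputs in, scores out.
Model = Callable[[Sequence[float]], List[float]]


@dataclass
class HybridSession:
    """Illustrative wrapper, not a real API: one entry point, two places to run."""
    local_model: Model     # stands in for on-device inference (CPU, GPU or NPU)
    cloud_endpoint: Model  # stands in for a remote, Azure-hosted model

    def run(self, inputs: Sequence[float], target: str = "local") -> List[float]:
        # The caller's code is identical whichever target is picked;
        # only this dispatch step knows where the work actually happens.
        if target == "local":
            return self.local_model(inputs)
        if target == "cloud":
            return self.cloud_endpoint(inputs)
        raise ValueError(f"unknown target: {target!r}")


def tiny_model(inputs: Sequence[float]) -> List[float]:
    # Toy "model": doubles every input so results are easy to check.
    return [2.0 * x for x in inputs]


def fake_cloud_call(inputs: Sequence[float]) -> List[float]:
    # Stub for a network request; it runs the same toy model so that
    # local and cloud results match, as they should for one model.
    return tiny_model(inputs)


session = HybridSession(local_model=tiny_model, cloud_endpoint=fake_cloud_call)
print(session.run([1.0, 2.5], target="local"))  # [2.0, 5.0]
print(session.run([1.0, 2.5], target="cloud"))  # [2.0, 5.0]
```

The design point is that application code calls `run` the same way in both cases, which is what lets the execution location be chosen separately from the app logic.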

Microsoft’s Project Volterra

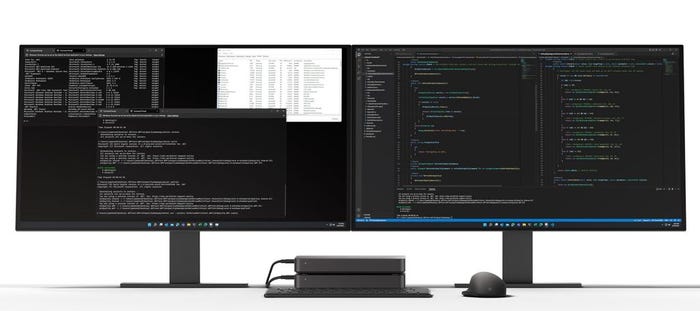

Project Volterra is a prototyping kit designed to enable developers to invoke Microsoft’s AI. The Arm-based kit, powered by Qualcomm’s Snapdragon processor, will enable simulation of applications on devices with NPUs. Microsoft said Project Volterra will let Windows developers build and debug native apps for Arm devices with their preferred tools.

The Apps

Among the supported apps are Visual Studio, Windows Terminal, WSL, VS Code, Microsoft Office and Teams. However, Microsoft said the final tooling supported is subject to change. Hybrid Loop and Project Volterra aim to enable new devices and apps that can tap the intelligence of the cloud.

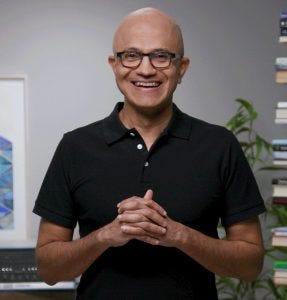

Microsoft’s Satya Nadella at Build 2022

“If there’s one thing that I believe that will surpass the AI supercomputer, it’s the supercomputing capacity that will be distributed across all the devices we carry and use throughout our lives,” Microsoft chairman and CEO Satya Nadella said during the opening keynote at Build, a virtual event this week.

Nadella added: “In this hybrid cloud-to-edge world, you will be able to do large-scale training in the cloud and do inference at the edge and have the fabric work as one. This pattern allows you to make late-binding runtime decisions on whether to run inference on Azure, or the local client. It can also dynamically shift the load between client and cloud.”
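The late-binding decision Nadella describes can be illustrated with a small policy function. This is a hedged sketch, not Microsoft's implementation: the `DeviceState` fields and every threshold below (battery percentage, round-trip latency, input size) are hypothetical, chosen only to show how an app might decide at call time whether to run inference locally or in Azure.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    """Hypothetical snapshot of conditions an app might check at call time."""
    has_npu: bool
    battery_percent: float
    network_rtt_ms: float  # round-trip time to the cloud endpoint; inf if offline
    input_mb: float        # size of the data to be processed


# Illustrative thresholds only; a real app would tune or learn these.
LOW_BATTERY_PERCENT = 20.0
SLOW_NETWORK_MS = 150.0
LARGE_INPUT_MB = 50.0


def choose_target(state: DeviceState) -> str:
    """Return "local" or "cloud" for a single inference call (late binding)."""
    # Offline or very slow link: run on the device (NPU, or CPU/GPU if no NPU).
    if state.network_rtt_ms >= SLOW_NETWORK_MS:
        return "local"
    # Large inputs are costly to upload, so keep them local if an NPU is present.
    if state.input_mb >= LARGE_INPUT_MB and state.has_npu:
        return "local"
    # Without an NPU, or on low battery, shift the work to the cloud.
    if not state.has_npu or state.battery_percent < LOW_BATTERY_PERCENT:
        return "cloud"
    # Default: a low-power NPU is available, so keep inference on the device.
    return "local"


print(choose_target(DeviceState(has_npu=True, battery_percent=80.0, network_rtt_ms=40.0, input_mb=1.0)))            # local
print(choose_target(DeviceState(has_npu=False, battery_percent=80.0, network_rtt_ms=40.0, input_mb=1.0)))           # cloud
print(choose_target(DeviceState(has_npu=True, battery_percent=10.0, network_rtt_ms=40.0, input_mb=1.0)))            # cloud
print(choose_target(DeviceState(has_npu=False, battery_percent=80.0, network_rtt_ms=float("inf"), input_mb=1.0)))  # local
```

Because the policy runs on every call rather than at install or build time, the same app can move work between client and cloud as conditions change, which is the kind of dynamic load shifting the quote describes.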

Power of NPUs

Hybrid Loop and the Project Volterra kits are possible thanks to the emergence of NPUs, said Kevin Gallo, corporate VP for Microsoft’s Windows developer platform. Gallo described NPUs as pieces of silicon designed to accelerate AI inferencing while consuming much less power than a CPU.

Microsoft’s Kevin Gallo at Build 2022

“Because we will see NPUs being built into most – if not all – future computing devices, we’re going to make it easy for developers to leverage these new capabilities by baking support for NPUs into the end-to-end Windows platform,” Gallo said.

Microsoft plans to deliver Project Volterra to developers later this year.

Native Apps on Arm

Along those same lines, Microsoft is expanding its support for Windows running on Arm-based devices. Currently, many existing Windows apps run in emulation mode on Arm-based devices. Gallo acknowledged that users want native apps.

“Native apps deliver the fastest, most responsive liquid smooth UX, consume less memory storage and I/O and deliver great battery life,” Gallo said. To deliver that capability, Microsoft will offer an Arm-native toolchain with Visual Studio 2022. The Arm-native support will also extend to VS Code, Visual C++, .NET 6 and Java, Windows Terminal, and the Windows Subsystem for Linux (WSL) and Windows Subsystem for Android (WSA) for running Linux and Android apps. Microsoft is aiming to release it before the end of this year.

TECHnalysis Research’s Bob O’Donnell

“Dynamically allocating resources has been a key part of cloud computing architectures since the early days, so it just makes sense to want to apply some of these principles to client computing devices,” wrote Bob O’Donnell, president and chief analyst at TECHnalysis Research. “One big challenge is that, up until now, applications haven’t been specifically written or optimized to run in these kinds of environments. Part of the Microsoft vision involves using cloud-native development practices to create these AI-powered, hybrid client/cloud apps.”

Want to contact the author directly about this story? Have ideas for a follow-up article? Email Jeffrey Schwartz or connect with him on LinkedIn.