Google Cloud has expanded committed use discounts to GPUs and introduced capacity reservations.

June 5, 2019

Google Cloud Platform is adding more pricing options for enterprises using its Compute Engine, the latest move to bring greater flexibility and simplicity to the public cloud.

The company is expanding its committed use discounts beyond CPUs to GPUs, Cloud TPU (Tensor Processing Unit) Pods and local SSD storage; customers that commit to using these resources can see discounts of up to 55% off on-demand prices. Google Cloud also introduced capacity reservations for Compute Engine, its infrastructure-as-a-service (IaaS) platform. With a reservation, enterprises can set aside resources in a specific zone and use them later, ensuring they have the capacity to handle spikes in demand (think Black Friday or Cyber Monday for retailers) as well as backup and recovery jobs.

The new pricing options are part of a larger push by Google Cloud to make cloud resources easier to manage and to ensure customers get the resources they need for the money they're paying, according to Paul Nash, director of product for Google Compute Engine.


“There’s a lot of innovation happening in cloud in general,” Nash told Channel Futures. “When you’re innovating and adding functionality to a complex product or set of products, if you’re not paying attention, that complexity tends to creep into everything. One of the things it can creep into is how you’re pricing and positioning the product.”

Pointing to conversations with customers and industry surveys, he noted that pricing and cost management, along with security, are usually at the top of enterprise concerns about the cloud. A survey by network analytics firm Kentik earlier this year found that while enterprises continue to pursue multicloud strategies, managing and controlling costs in these increasingly complex environments was a key challenge.

Google Cloud engineers have tried to address cost issues when developing new services, Nash said.

“What we’ve tried to do is think about pricing as just as important a design consideration as how the rest of the product works,” he said. “You shouldn’t have to have a finance degree or a Ph.D. in economics to understand what the correct behavior would be for you to optimize your spend.”

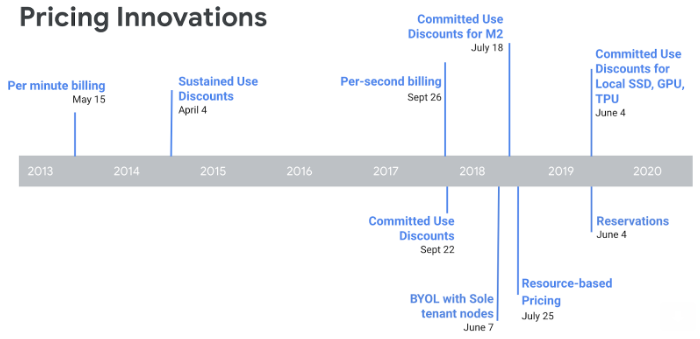

Over the past few years, Google Cloud has introduced a number of new pricing models, including per-minute and per-second billing, committed and sustained use discounts, and resource-based pricing.

The expansion of committed use discounts and the introduction of capacity reservations are part of this push. Extending committed use discounts to GPUs, Cloud TPU Pods and local SSDs is designed to ease the burden of managing commitments, particularly as workload demands change over time, according to Manish Dalwadi, product manager for Compute Engine.

“You don’t have to tell us the exact shape, the exact zone, the exact operating system [or] the exact networking type,” Dalwadi told Channel Futures. “All you have to do is tell us in aggregate the amount of resources that you want. If you want 100 vCPUs or 100 [Nvidia Tesla] V100 GPUs, you enter those in as an aggregate amount and the discount will apply to any of the [virtual machines] that you’re running that happen to use any of those resources.”
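To make the aggregate idea concrete, here is a simplified, hypothetical model: usage from every VM is pooled regardless of machine shape, the pooled amount up to the commitment is covered, and anything beyond it is billed on demand. The `VmUsage` class and `apply_commitment` function are illustrative names, not a Google Cloud API, and the sketch assumes a single GPU model rather than reproducing Google's actual billing logic.

```python
"""Simplified model of an aggregate (resource-based) commitment.

Hypothetical illustration only: the data model and function names are
assumptions, not Google Cloud's billing implementation. GPUs are treated
as a single pool, i.e. one GPU model (for example V100) is assumed.
"""
from dataclasses import dataclass


@dataclass
class VmUsage:
    name: str           # hypothetical VM name
    machine_shape: str  # ignored by the commitment; shown to make that point
    vcpus: int
    gpus: int


def apply_commitment(vms, committed_vcpus, committed_gpus):
    """Return (covered, on_demand) totals per resource type.

    Usage from all VMs is summed regardless of shape; the pooled amount up
    to the committed quantity is covered, the remainder is on demand.
    """
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_gpus = sum(vm.gpus for vm in vms)
    covered = {
        "vcpus": min(total_vcpus, committed_vcpus),
        "gpus": min(total_gpus, committed_gpus),
    }
    on_demand = {
        "vcpus": total_vcpus - covered["vcpus"],
        "gpus": total_gpus - covered["gpus"],
    }
    return covered, on_demand


if __name__ == "__main__":
    fleet = [
        VmUsage("train-a", "n1-standard-32", vcpus=32, gpus=4),
        VmUsage("train-b", "n1-highmem-64", vcpus=64, gpus=8),
        VmUsage("web-1", "n1-standard-16", vcpus=16, gpus=0),
    ]
    covered, on_demand = apply_commitment(fleet, committed_vcpus=100, committed_gpus=100)
    print("covered:", covered)
    print("on demand:", on_demand)
```

Because the commitment is expressed only as quantities, swapping `train-b` for a different machine shape with the same vCPU and GPU counts leaves the result unchanged, which is the flexibility Dalwadi describes.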

Customers are “able to evolve [their] workload without having to worry about getting locked into things they don’t want or can’t use in the future,” he said.

The discounts apply to Nvidia Tesla K80, P4, P100 and V100 GPUs and all available slice sizes of Cloud TPU v2 Pods and Cloud TPU v3 Pods, and are available in all regions. In addition, the longer the commitment, the deeper the discount. A one-year commitment can bring a 37% discount, while a three-year commitment can bring 55%.
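The effect of the two terms can be checked with back-of-the-envelope arithmetic. The sketch below applies the 37% and 55% rates quoted above as maximum discounts; the $2.00 hourly price and the eight-GPU fleet are hypothetical placeholders, not published Google Cloud prices, and resources are assumed to run around the clock for the whole term.

```python
"""Back-of-the-envelope comparison of committed use discount terms.

Assumptions: 37% (1-year) and 55% (3-year) are the maximum discounts
quoted in the article; the hourly price used below is hypothetical;
resources are assumed to run 24/7 for the entire term.
"""

HOURS_PER_YEAR = 24 * 365
MAX_DISCOUNT = {1: 0.37, 3: 0.55}  # term in years -> maximum discount


def term_cost(hourly_on_demand: float, units: int, years: int) -> tuple[float, float]:
    """Return (on_demand_cost, committed_cost) for `units` resources over `years`."""
    hours = HOURS_PER_YEAR * years
    on_demand = hourly_on_demand * units * hours
    committed = on_demand * (1 - MAX_DISCOUNT[years])
    return on_demand, committed


if __name__ == "__main__":
    PRICE = 2.00  # hypothetical on-demand $/hour per GPU, not a real list price
    for years in (1, 3):
        od, cud = term_cost(PRICE, units=8, years=years)
        print(f"{years}-year: on-demand ${od:,.0f}, committed ${cud:,.0f}, saved ${od - cud:,.0f}")
```

Put simply, each committed hour costs 63% of the on-demand rate on a one-year term and 45% on a three-year term, at the maximum discounts.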

Dalwadi said the use of GPUs on Google Cloud is increasing as more customers run greater numbers of artificial intelligence (AI) and machine-learning workloads. In addition, enterprise workloads are evolving and are using more GPUs, Nash said.

Zonal capacity reservations can help customers handle spikes in demand and disaster recovery situations, Dalwadi said. Customers can create or delete a reservation at any time, and because reserved resources are consumed like those of any VM, discounts customers already have apply to them automatically.

The new pricing models are in beta now and will be generally available later, Nash said.

Such flexible pricing models can help customers and channel partners alike, he said. For enterprises, the most successful deployments tend to involve a strong partner, so Google has at least one expert partner for each vertical industry or workload type to help customers. At the same time, the company is building an ecosystem of systems integrators to help enterprises make the transition to the cloud.


“There’s a whole scenario around … discovering your workloads, assessing what you’re going to move and then doing the migration, and a key piece of that is trying to understand what you’re going to pay,” Nash said. “We’ve been working to bring more of that awareness and intelligence of how Google’s payment structure works earlier into that process.”

Google Cloud’s efforts around pricing make sense, particularly as the cloud market matures and the differentiators between cloud providers become fewer, Roger Kay, principal analyst with Endpoint Technologies Associates, told Channel Futures.

“All operators have gotten reliability where it should be and they have plenty of bits to sell, so the only thing you can compete on is pricing,” Kay said. “There aren’t that many dials that vendors have to tweak, so pricing becomes important.”
