Grace will power the company's first AI-capable supercomputer.

April 14, 2021

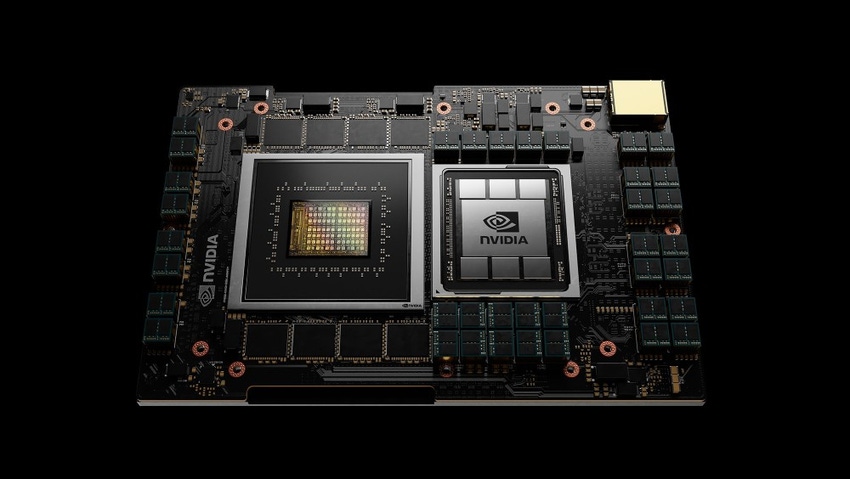

Nvidia is pushing the limits of computing with the introduction of Project Grace, its first data center CPU. Project Grace, revealed at this week’s annual Nvidia GTC event, is based on the Arm architecture.

Besides marking Nvidia’s foray into offering CPUs, look for Project Grace to extend the limits of Arm-architecture processors. While Nvidia agreed to acquire Arm last summer for $40 billion, the deal has yet to close, and rival Qualcomm is trying to persuade regulators to reject it. Nvidia founder and CEO Jensen Huang said during a media briefing that he is confident regulators will approve it.

“I’m confident that we will still get the deal done in 2022, which is when we expected it in the first place,” Huang said.

Nvidia is licensing the Arm architecture to create the Grace CPU; hence, it’s not dependent on the deal closing, he indicated.

Grace is not a mainstream data center CPU; rather, it is designed for high-performance computing (HPC). Its target is large-scale AI, deep learning and big data analytics, according to Huang.

“Grace is Arm-based, and purpose-built for accelerated computing applications of large amounts of data such as AI,” Huang said during his GTC keynote. “Grace highlights the beauty of Arm. Their IP model allowed us to create the optimal CPU for this application, which achieves X Factor speed-up.”

Furthermore, Huang said the new CPU will give Nvidia’s next 8-core DGX deep learning server platform a SPECint rate of 2400, compared with 450 for the current DGX. Huang called that an “amazing increase in system memory bandwidth.”

Named after the computing pioneer Grace Hopper, the CPU will first appear in a supercomputer under development by HPE. Expect the HPE Cray system powered by Grace to go live in 2023. Nvidia said the Swiss National Supercomputing Center and the U.S. Department of Energy’s Los Alamos National Laboratory are first in line. HPE claims the system is poised to become the most powerful AI-capable supercomputer in the world.

Impact on Intel and AMD

Huang rejected the notion that Nvidia will be competing with Intel and AMD now that it has created a data center CPU.

“Companies have leadership that are a lot more mature than we give them credit for,” he said.

Industry analyst Bob O’Donnell of TECHnalysis Research doesn’t see Project Grace as a challenge to Intel and AMD’s x86 data center CPUs.

“The original implementation goal for Grace is only for HPC and other huge AI-model-based workloads,” O’Donnell noted.

“It is not a general-purpose CPU design,” he added. “Still, in the kinds of advanced, very demanding and memory-intensive AI applications that Nvidia is initially targeting for Grace, it solves the critical problem of connecting GPUs to system memory at significantly faster speeds than traditional x86 architectures can provide.”

BlueField-2 DPUs Now Available

At GTC, Nvidia is showcasing its expanded focus on accelerating AI, deep learning and big data analytics. Nvidia’s data processing units (DPUs) are a new class of programmable processor that joins CPUs and GPUs. DPUs move data throughout a data center to process it, creating what Nvidia describes as a data-centric, accelerated computing model.

Nvidia gained the technology for its DPUs with last year’s $7 billion acquisition of Mellanox. Nvidia announced that its BlueField-2 DPUs are now generally available. The DPUs have 100 Gb/s Ethernet or InfiniBand network ports and are available with up to 8 Arm cores. The company also revealed that next year’s successor DPU, BlueField-3, will be backward-compatible.

The BlueField-3 DPU will offer 400 Gb/s networking and deliver data center services equivalent to up to 300 CPU cores. Nvidia said BlueField-3 will create “zero-trust” environments within traditional data centers. The DPU will also support Morpheus, Nvidia’s new application security framework announced at GTC.

Nvidia described Morpheus as a cloud-native platform that uses machine learning to detect and remediate threats that currently are undetectable. Among those threats are leaks of unencrypted data, phishing attempts and malware intrusions. Morpheus with the BlueField-3 DPUs will let organizations protect data center infrastructure from the core to the edge, Nvidia said.

DGX SuperPOD Cloud-Native AI Supercomputer

The BlueField-2 DPUs will power Nvidia’s new DGX SuperPOD, which the company claims is the first cloud-native, multi-tenant AI supercomputer. Each DGX SuperPOD will include at least 20 Nvidia DGX A100s, which are 5-petaFLOPS AI systems built on the company’s Tensor Core GPUs. Moreover, Nvidia designed the DGX A100s to let customers consolidate training, inference and analytics into a unified AI infrastructure.

Nvidia also launched Base Command, which gives users and administrators secure access to share and operate the DGX SuperPOD infrastructure. Nvidia said Base Command coordinates AI training and operations for globally distributed teams.
