New hardware enables myriad capabilities without requiring the cloud, but the consumer market is still way ahead.

Data-driven experiences are rich, immersive and immediate. But they’re also delay-intolerant data hogs.

Think pizza delivery by drone, video cameras that can record traffic accidents at an intersection, freight trucks that can identify a potential system failure.

These kinds of fast-acting activities need lots of data, and quickly, so they can’t tolerate the latency of data traveling to and from the cloud. That round trip takes too long; instead, many of these data-intensive processes must stay local, processed at the edge, on or near the hardware device itself.

“An autonomous vehicle cannot wait even for a tenth of a second to activate emergency braking when the [artificial intelligence] algorithm predicts an imminent collision,” wrote Northwestern University professor Mohanbir Sawhney in “Why Apple and Microsoft Are Moving to the Edge.” “In these situations, AI must be located at the edge, where decisions can be made faster without relying on network connectivity and without moving massive amounts of data back and forth over a network.”
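To put Sawhney's tenth of a second in physical terms, the short sketch below computes how far a vehicle travels during a given delay. The speeds are illustrative assumptions, not figures from the article, and the function name `distance_during_delay` is hypothetical.

```python
def distance_during_delay(speed_kmh: float, delay_s: float) -> float:
    """Return metres travelled at a constant speed during delay_s seconds."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_ms * delay_s


if __name__ == "__main__":
    # Assumed example speeds (km/h); the 0.1 s delay is the figure quoted above.
    for speed_kmh in (50, 100, 130):
        metres = distance_during_delay(speed_kmh, 0.1)
        print(f"{speed_kmh} km/h: {metres:.2f} m travelled in 0.1 s")
```

At an assumed 100 km/h, the vehicle covers almost three metres in that tenth of a second, before any braking has begun.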

“AI edge processors allow you to do the processing on the [device] itself or feed into a server in the back room rather than having the processing being done in the cloud,” said Aditya Kaul, a research director at Omdia, a research firm.

AI at the Edge: Enterprise vs. Consumer Adoption

The ability of AI chips to perform tasks such as machine learning inferencing has expanded dramatically in recent years. Consider the graphics processing unit (GPU), which can offer more than 10 teraflops of performance, or more than 10 trillion floating-point calculations per second. Modern smartphones have GPUs that can handle billions of floating-point operations per second. Even a couple of years ago, this kind of on-device processing wasn’t available. But today, devices at the edge – smartphones, cameras, drones – can handle AI workloads.

Only with the emergence of deep learning chipsets – or artificial intelligence-enabled silicon including GPUs among other chips – has this been possible. And the AI chipset market has taken off like a rocket.

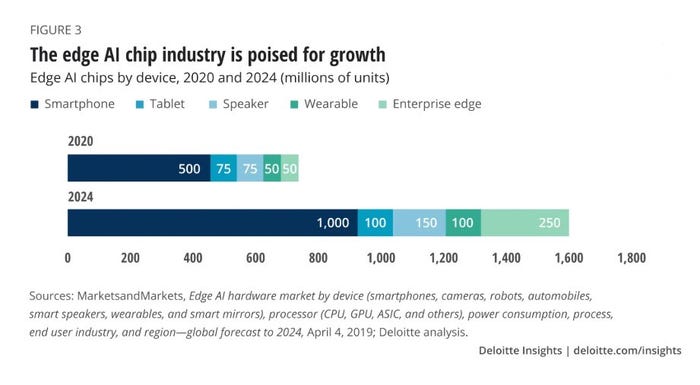

“From essentially zero a few years ago, [edge AI chips] will earn more than $2.5 billion in ‘new’ revenue in 2020, with a 20% growth rate for the next few years,” wrote the authors of the Deloitte report, “Bringing AI to the Device.” [See Figure 3 to the right.]
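As a rough illustration of what a 20% growth rate implies, the sketch below compounds the $2.5 billion figure forward. The three-year horizon is an assumption (the report says only "the next few years"), and the output is plain arithmetic, not a forecast from Deloitte.

```python
def project_revenue(base_billion: float, annual_growth: float, years: int) -> list[float]:
    """Compound a starting revenue figure forward, one value per year.

    Index 0 is the base year; index n is the value after n years of growth.
    """
    return [base_billion * (1 + annual_growth) ** n for n in range(years + 1)]


if __name__ == "__main__":
    # 2.5 and 0.20 come from the quote above; 3 years is an assumed horizon.
    for offset, value in enumerate(project_revenue(2.5, 0.20, 3)):
        print(f"2020 + {offset}: ${value:.2f} billion")
```

Under these assumptions, revenue would pass $4 billion by the third year of growth.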

According to the Omdia report, “Deep Learning Chipsets,” the market for AI chipsets is expected to reach $72.6 billion by 2025.

Experts say the consumer market has paved the way. Today, in 2020, the consumer device market likely represents 90% of the edge AI chip market, in terms of the numbers sold and their dollar value.

“The smartphone market is at the leading edge of this,” said Aditya Kaul, senior director at Omdia, which recently released the report “Deep Learning Chipsets.” Smartphones still represent about 40-50% of the AI chipset market.

But, Kaul said, AI-enabled processing at the edge is coming to the enterprise, in areas such as industrial IoT and retail as well as health care and manufacturing.

“You can call it ‘enterprise-grade AI edge,’” Kaul said.

The impetus for enterprise adoption of AI at the edge, Kaul said, is “clarity on use cases.” Machine vision, for example, which automates product inspection and process control, can improve the quality and efficiency of formerly manual processes in areas like an industrial shop floor.


“People are starting to use deep learning [in industrial settings] to identify faults in the auto industry; for example, they can spot defects in the doors, the handles or glass during assembly. In the food and beverage industries, they identify stale tomatoes, or a biscuit factory can identify biscuits that aren’t the right shape,” Kaul said.

In addition to quality control, though, industries are using machine vision to promote new experiences.

“Retail is a massive sector where we see some of this happening,” Kaul said. “It’s enterprise-grade edge and use of cameras in supermarkets for shopper analytics. Where are they idling and looking at certain products?”

AI at the Edge Works with Cloud Computing

AI at the edge has reinvigorated interest in hardware, after several years in which software was king.

AI at the edge is all about low latency: distributed hardware can enable processing without aid from the cloud.

“With the growth of AI, hardware is fashionable again, after years in which software drew the most corporate and investor interest,” indicated the McKinsey report, “Artificial Intelligence: The Time To Act is Now.”

Hardware has also brought decentralized computing architecture back into vogue, because centralized architectures raise latency and data security concerns.

“You want the decisions to be made right there and then instead of relying on the latency of the cloud,” Kaul said. “And also, you don’t want data in a third-party cloud. From a security standpoint, the data should stay on-premises.”

Ultimately, experts suggest that AI at the edge will be a complementary architecture to existing cloud computing architecture.

“AI in the cloud can work synergistically with AI at the edge,” wrote Sawhney. “Consider an AI-powered vehicle like Tesla. AI at the edge powers countless decisions in real time such as braking, steering, and lane changes. At night, when the car is parked and connected to a Wi-Fi network, data is uploaded to the cloud to further train the algorithm.”
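The division of labor Sawhney describes can be sketched in a few lines: decisions are made locally with no network call, while data is buffered and handed off only when a connection is available. Everything here is hypothetical, including the `EdgeDevice` class, the `brake_threshold_m` value and the simple threshold rule, which stands in for a real on-device model.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    """Toy edge device: decides locally, uploads later (illustrative only)."""

    brake_threshold_m: float = 5.0  # assumed value, not from the article
    upload_buffer: list = field(default_factory=list)

    def decide(self, obstacle_distance_m: float) -> str:
        """Make a real-time decision on the device, without any network call."""
        # A threshold rule stands in for on-device AI inference.
        action = "brake" if obstacle_distance_m < self.brake_threshold_m else "continue"
        # Keep the observation so it can later be used for cloud training.
        self.upload_buffer.append((obstacle_distance_m, action))
        return action

    def sync(self, on_wifi: bool) -> list:
        """Return the buffered records for upload, but only when connected."""
        if not on_wifi:
            return []
        batch, self.upload_buffer = self.upload_buffer, []
        return batch


if __name__ == "__main__":
    car = EdgeDevice()
    print(car.decide(3.0))    # decided on the device
    print(car.decide(20.0))   # decided on the device
    print(len(car.sync(on_wifi=True)), "records ready for cloud training")
```

The point of the design is that `decide` never waits on the network, so connectivity affects only when training data reaches the cloud, not how quickly the device reacts.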

Expectations of Continued Growth in AI at the Edge

Much of the growth in the AI edge chip market is attributable to increased capability in the hardware itself. But it also involves operational changes in how industries approach AI.

While traditional industries such as industrial manufacturing were previously reluctant to incorporate artificial intelligence into their processes, they now see AI at the edge as beneficial and, indeed, a key to ROI. As a result, they are introducing big-data analytics into their processes, training algorithms to improve the accuracy of these processes, and seeing the results in quality control.

“The only way that these models can be accurate is by training them with the right data,” Kaul said. “Two years ago, you wouldn’t have found many people in these sectors where if you asked them about training data, they might look at you in a strange way. But now more people understand how AI works.”

Omdia predicts that this growth will continue, and there will be an “inflection point in 2021-2022,” Kaul said, with a “rapid move toward AI accelerators, ASIC chips.”

Expect the growth to be measured, though, Kaul emphasized.

“A lot of these vendors and markets – in terms of innovation – have been stagnant,” Kaul said. “There hasn’t been much innovation over the last 20, 30 years. So they are generally slow to move. But in some areas, things are picking up, in industrial vision, medical vision and retail. It’s still early. But things are starting to pick up.”
